In recent years, the European Union has stepped up its efforts to counter disinformation, with the Code of Practice on Disinformation (CoPD) serving as a cornerstone of its approach. Originally introduced in 2018 and revised in 2022, the CoPD is a self-regulatory framework that guides Very Large Online Platforms and Search Engines (VLOPSE) in meeting their obligations to reduce the spread and impact of disinformation. From July 1, 2025, the Code will take effect as a formal Code of Conduct under the Digital Services Act (DSA).

This report evaluates the implementation of the CoPD between January and June 2024, focusing on the actions reported by Meta (Facebook and Instagram), Google (Search and YouTube), Microsoft (Bing and LinkedIn), and TikTok. Signatories operating very large online platforms or search engines are required to submit transparency reports twice a year, detailing their efforts to meet their commitments under the Code. Within this reporting, the analysis concentrates on key areas such as improving transparency, supporting media literacy, enhancing fact-checking partnerships, and enabling research.

The assessment examines the extent to which these seven VLOPSEs’ services have met core commitments under the CoPD and evaluates the real-world impact of selected actions and initiatives taken under the Code. This report aims to establish an evidence-based benchmark for evaluating platforms’ compliance with the Code’s requirements and the effectiveness of their efforts. It identifies both successes and shortcomings, offering a foundation for the continued evolution of policy and future improvements in transparency and accountability measures.

The evaluation draws on multiple sources: transparency reports submitted in 2024, independent verification by EDMO researchers, and qualitative insights from a survey of experts. The analysis assesses the progress of the VLOPSEs on eight key commitments under three pillars of the CoPD:

Empowering Users

- Commitment 17: Media literacy initiatives

- Commitment 21: Tools to help users identify disinformation

Empowering the Research Community

- Commitment 26: Access to non-personal, anonymized data

- Commitment 27: Governance for sensitive data access

- Commitment 28: Cooperation with researchers

Empowering the Fact-Checking Community

- Commitment 30: Cooperation with fact-checkers

- Commitment 31: Fact-checking integration in services

- Commitment 32: Access to relevant information for fact-checkers

Methodology

The research, conducted by EDMO researchers from different Hubs, involved reviewing the transparency reports submitted by platforms, cross-referencing their claims with external sources, and documenting areas where verification was not possible. Qualitative insights were drawn from a survey distributed among media literacy experts, fact-checkers, and disinformation researchers affiliated with or collaborating with EDMO. Together, these methods provide a comprehensive perspective on platform performance and accountability. Notably, this is the first initiative of its kind to combine self-reported platform data with independent verification and external expert evaluation.

The report is organized into three parts. First, when assessing Compliance, EDMO researchers examined whether platforms’ transparency reports contain comprehensive and detailed accounts of their disinformation mitigation efforts. These self-reported measures were evaluated using a standardized scale, based on a defined set of indicators (see Annex A). The reliability of the platforms’ claims was assessed through cross-referencing with external sources, including public reports and input from EDMO colleagues and collaborators, where their expertise was applicable.

Second, the assessment of Effectiveness is based on an expert survey of affiliated media literacy experts, fact-checkers, and researchers, conducted to gather qualitative insights into how platform efforts are perceived by professionals in the field (see Annex C). A total of 91 experts from 25 countries, representing 14 EDMO Hubs, took part in the survey.

Conducted between December 2024 and February 2025, the survey was organized around the same three thematic pillars: (1) Media Literacy/Empowering Users, (2) Research/Empowering the Research Community, and (3) Empowering Fact-Checking. Participants assessed the availability and relevance of more than thirty measures, ranging from digital-skills campaigns and in-app warnings to data-access protocols and independent verification tools, and provided qualitative comments. These insights were then cross-referenced with findings from the transparency reports and with expert feedback from EDMO’s central team and individual Hubs. Together, they provide a robust basis for evaluating platforms’ performance and for identifying gaps in support for media literacy, fact-checking, transparency, data sharing, and collaborative oversight.

Finally, the Recommendations synthesize the findings from both the assessment of completeness and verifiability of platforms’ reports and the expert survey feedback, offering policy recommendations aimed at improving the future implementation of the Code of Conduct and strengthening platform accountability.

Key Findings

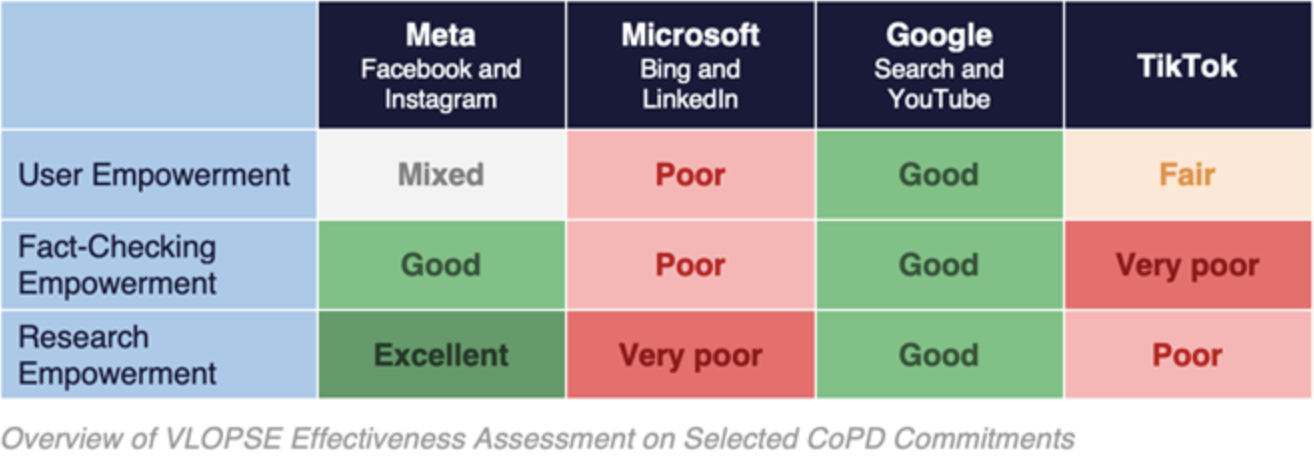

Effectiveness

Overall, regarding the effectiveness of VLOPSE initiatives and actions, the expert survey suggests that the efforts undertaken so far remain very limited and lack consistency and meaningful engagement (see Table 1). While some platforms, notably Meta and Google, have launched initiatives to address disinformation, these are frequently criticized as superficial or symbolic. In particular, some of Meta’s statements cast doubt on its future commitment and suggest a retreat from active collaboration. In every field, most VLOPSEs tend to adopt a reactive rather than a proactive stance, offering little transparency or structured support for users, fact-checkers, and researchers. Even where formal agreements exist, their implementation often falls short of expectations. As a result, current efforts rarely translate into long-term, systemic support for counter-disinformation strategies.

It should be noted that, by its very nature, the analysis in this report captures the effectiveness of platforms’ measures only as perceived by a representative cross-section of relevant stakeholders (researchers, fact-checkers, and civil society organisations); these aggregate perceptions do not necessarily reflect the specific views of any single category of stakeholder.

Compliance

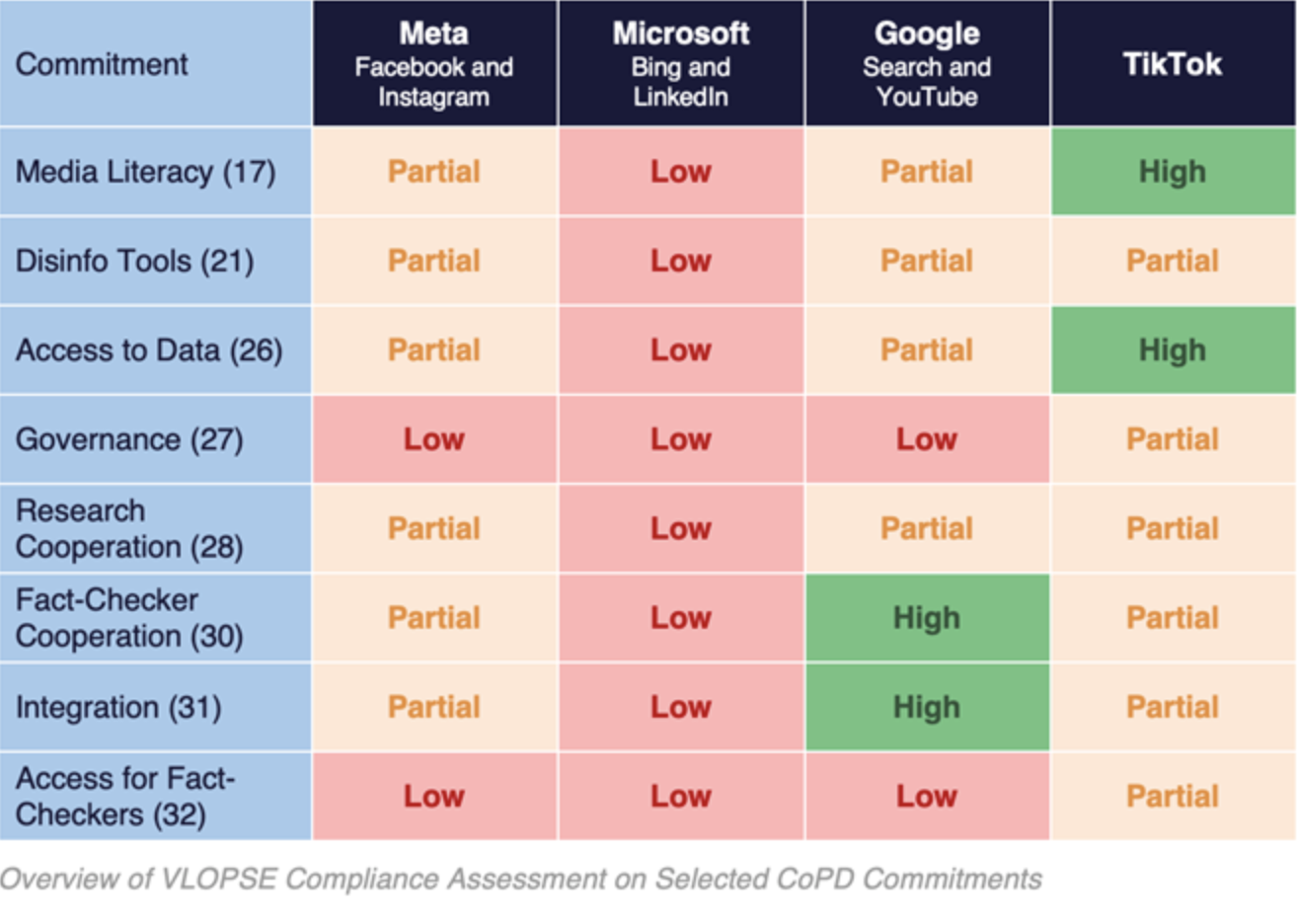

As regards the assessment of compliance, the analysis of the completeness and verifiability of transparency reports from the four major online platforms under the CoPD reveals a consistent trend of partial implementation, with uneven progress across key areas.

Empowering Users

Efforts to empower users through media literacy and content labeling (Commitment 17) vary considerably across platforms. Meta (Facebook & Instagram) demonstrates engagement with media literacy through initiatives such as We Think Digital and in-app prompts. However, it discloses little about the geographical scope of these efforts and provides no substantive data on user engagement or measurable outcomes at the national level. Microsoft, through Bing and LinkedIn, references partnerships with services like NewsGuard and mentions participation in various campaigns, but these references remain superficial and offer no substantiated evidence of impact: there are no user engagement figures, no reported outcomes, and no indication of the actual scale of these efforts.

Google’s services (Search and YouTube) show greater structural commitment, notably through prebunking initiatives and features such as “More About This Page.” However, these efforts remain largely unverifiable, as Google provides no concrete data on user reach or effectiveness. While the initiatives appear well designed in theory, the lack of transparency around their actual performance makes it impossible to assess their real-world impact.

TikTok presents a more promising case, documenting a broader range of national campaigns and fact-checking partnerships. However, it still fails to provide country-specific detail or consistent engagement data. While it discloses limited behavioral indicators like share cancellation rates, it offers no comprehensive assessment of the overall impact of these interventions.

On tools to help users identify disinformation (Commitment 21), all platforms rely on labels, panels, or warnings, but none report in detail on how these tools work or what effect they have. Meta reports maintaining a substantial fact-checking network and applying content labels such as “False” or “Partly False.” However, it provides little evidence of how these interventions affect user behavior, offering almost nothing beyond a single figure on interrupted reshares and a few isolated engagement statistics. Microsoft limits its efforts to commercial tools like NewsGuard and unspecified AI-based detection, with no accompanying metrics. Google applies labeling mechanisms on both Search and YouTube and reports some aggregate reach data, but presents no metrics on user impact or behavior change. TikTok combines labeling with user notifications and provides partial insights into user behavior, such as share cancellation rates, although comprehensive evaluations are still absent.

Empowering the Research Community

Regarding access to non-personal, anonymized data (Commitment 26), all platforms make at least nominal provisions, but the quality and transparency of these efforts diverge. Meta provides data access via the ICPSR (University of Michigan) but fails to disclose national-level usage or uptake metrics. Microsoft references beta programs without clear evidence of researcher access or data granularity. Google makes several research tools available, yet their utility for disinformation-specific studies remains limited. TikTok appears to make more progress in this category, offering a Research API and dashboards with documented application processes and publicly available uptake metrics by Member State, even though the usability of the provided tools remains unclear.

Governance for sensitive data access (Commitment 27) represents a weak point across all platforms. Meta, Microsoft, and Google reference pilot programs but provide no substantive public documentation on governance frameworks or participant outcomes. TikTok’s participation in the EDMO data access pilot is acknowledged, though no conclusive evidence is provided regarding the effectiveness or transparency of these governance efforts.

In terms of cooperation with researchers (Commitment 28), Meta claims to offer various tools, yet the allocation processes and prioritization criteria are opaque. Microsoft demonstrates minimal engagement in this area, lacking structured programs or support mechanisms. Google presents more comprehensive support through EMIF funding and affiliated research initiatives but is similarly hampered by governance and transparency gaps. TikTok offers extensive documentation and structured resources, but the complexity of its application procedures continues to limit effective access.

Empowering the Fact-Checking Community

Cooperation with fact-checkers (Commitment 30) shows varying degrees of commitment. Meta lists multiple activities and partnerships but offers no systematic evaluation of their impact. Microsoft provides only minimal and vague references to cooperation. In contrast, Google describes well-integrated processes, including testing methodologies and financial support through EMIF, albeit without exhaustive metrics. TikTok similarly lists partnerships and fact-checking processes but does not provide sufficient evidence of their effectiveness or external validation.

The integration of fact-checking into services (Commitment 31) shows similar patterns. Meta claims to apply labels and reduce content visibility but lacks detailed reporting on effectiveness. Microsoft provides no meaningful reporting on fact-checking integration. Google demonstrates a more systematic approach, employing panels and A/B testing to assess effectiveness. TikTok applies labels but does not clearly articulate the impact on users or content creators.

Finally, access to relevant information for fact-checkers (Commitment 32) remains poorly documented across all platforms. Meta mentions internal dashboards but provides no external verification or measurable data. Microsoft fails to report any dedicated tools or interfaces for fact-checkers. Google does not describe any specific mechanisms for information sharing. TikTok references the availability of dashboards but admits to limited depth and a lack of systematic external validation. Annex B summarizes each platform’s actions on the selected critical commitments in greater detail.

Overall, compliance of VLOPSE with the selected commitments of the Code of Practice on Disinformation remains inconsistent (see Table 2).

While some reported actions are supported by independent evidence, many lack transparency or verifiable data. Although platforms like Google and TikTok demonstrate more structured approaches in certain areas, none provide full transparency, independent verification, or robust impact reporting. Meta’s efforts are undermined by poor disclosure and the absence of meaningful impact data. While Microsoft’s performance is particularly weak across all commitments, this result should be considered in connection with the specific risk-profile of its services. Strengthening the enforcement of reporting requirements and mandating independent audits are essential steps toward improving the accountability and effectiveness of VLOPSEs’ commitments to implement the future Code of Conduct.