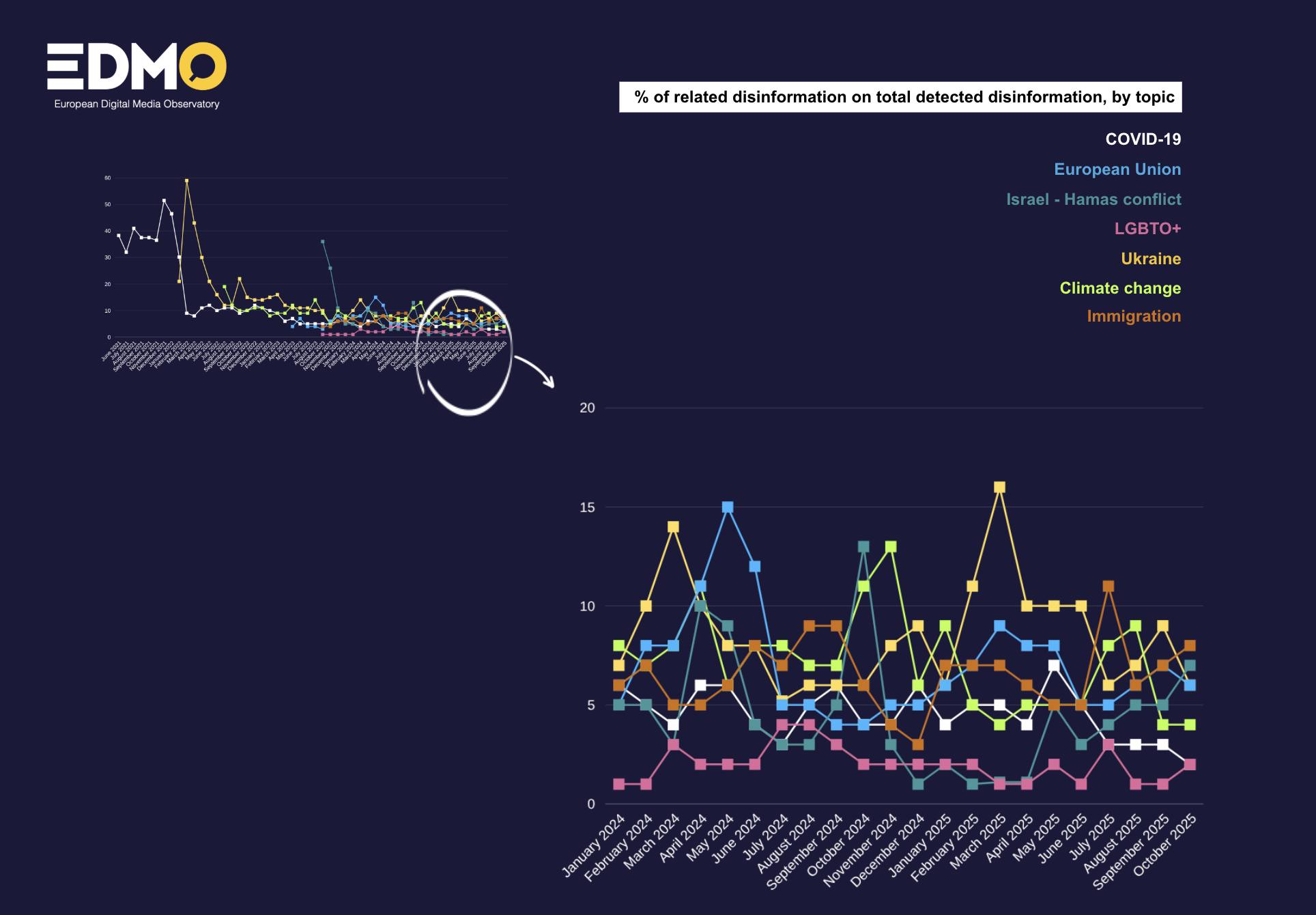

DISINFORMATION IN OCTOBER FOCUSES ON UKRAINE, IMMIGRATION AND THE EU

The 32 organizations* in the EDMO fact-checking network that contributed to this brief published a total of 1,722 fact-checking articles in October 2025. Of these articles, 132 (8%) focused on disinformation related to immigration; 114 (7%) on the crisis in Gaza; 101 (6%) on Ukraine-related disinformation; 94 (6%) on disinformation related to the EU; 74 (4%) on climate change-related disinformation; 40 (2%) on COVID-19-related disinformation; and 26 (2%) on disinformation about LGBTQ+ and gender issues.

In October, the share of false stories related to immigration increased by one percentage point, while the share of disinformation on the crisis in Gaza rose by two percentage points. At the same time, the share of false news on the conflict in Ukraine decreased by three percentage points.

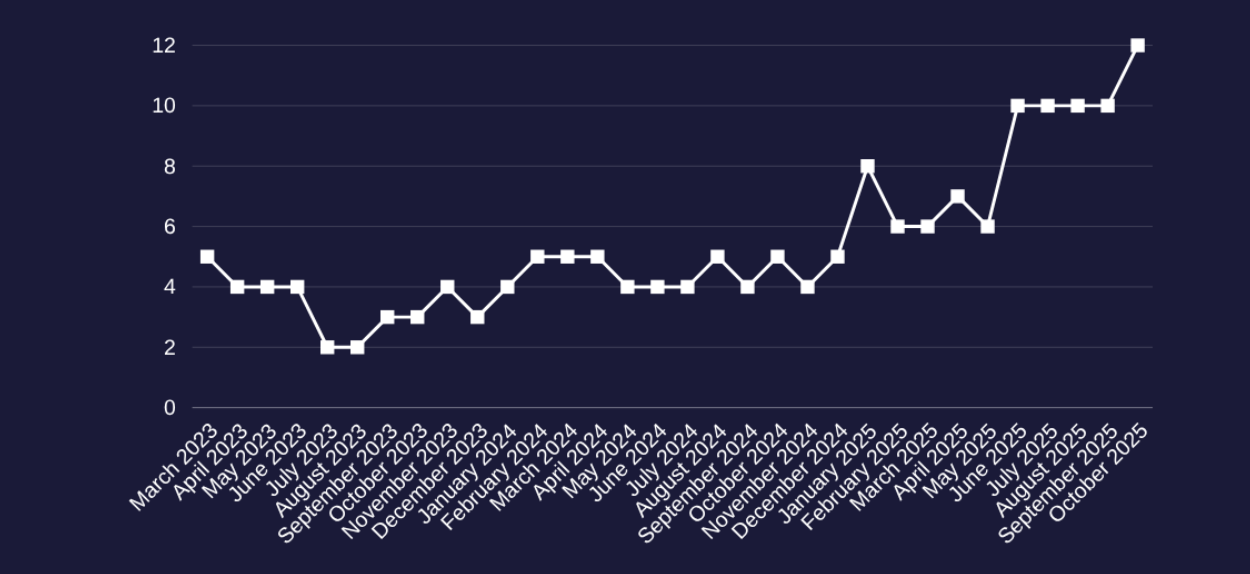

AI-GENERATED DISINFORMATION REACHES A NEW RECORD

The percentage of disinformation stories using AI-generated content has risen to a new record. Out of 1,722 fact-checking articles, 210 addressed the use of this technology in disinformation, representing 12% of the total.

With the launch of several generative AI tools throughout the year, the share of misleading and deceptive content generated with AI has increased. Given the trend observed in previous months and the ongoing shift toward AI-generated content among many disinformation actors, this share is expected to increase further.

AI-generated content is often created to maximize monetisable engagement across social media platforms. This is the so-called "AI slop": videos of people or animals saving babies from dangerous situations, as well as other emotional or sensationalist stories that exploit human empathy and compassion for profit. With similar purposes, a large share of videos and images aim to provide visual content for real-world events, such as the theft at the Louvre Museum in Paris or Hurricane Melissa. In other cases, AI-generated content is used to enhance the credibility of fraudulent content.

Generative AI is also weaponised to produce images and videos related to well-known disinformation narratives, such as the one targeting Ukrainian President Volodymyr Zelensky (see slide n.X). Other content aims to spread disinformation about the conflict in Ukraine, such as the AI-generated video in which alleged Polish soldiers claim that the drones that violated Polish airspace in September were Ukrainian. Anti-immigration and Islamophobic narratives are also fueled by synthetic videos: examples include one showing young people criticizing German migration policy and another depicting an alleged French police officer performing an Islamic prayer inside the Paris metro.

CLICK HERE FOR MORE INFORMATION ABOUT THE NEW EDMO MONTHLY BRIEF